GPU-Accelerated Deep Learning: Object Detection Using Transfer Learning With TensorFlow

In an effort to improve the overall health of the San Francisco Bay, Kinetica and the San Francisco Estuary Institute have partnered to deploy a scalable solution for autonomous trash detection.

Project Background

The need for an advanced solution to protect the San Francisco Bay has become evident as more and more plastic leaks into its estuaries. The San Francisco Estuary Institute (SFEI) aims to create an open-source, autonomous trash detection protocol using computer vision. The proposed solution uses multiple drones to capture images of the Bay Area and trains a convolutional neural network (CNN) to identify trash in those images. Once trash is identified, its coordinates can be extrapolated, totals can be calculated, and teams can be deployed to remove the waste quickly and effectively.

The question is: how do you build and deploy this type of solution at scale where the results are effective and the costs are feasible?

With that question in mind, there are several factors to consider first. Image processing can be time-consuming and resource-intensive. Is there a way to build a real-time or near-real-time solution that scales? If so, which frameworks can achieve this? And what results and costs come with it? Using Kinetica’s Active Analytics Platform and Oracle Cloud Infrastructure, the San Francisco Estuary Institute has developed a scalable, open-source solution.

Accelerated Object Detection Using Kinetica’s Active Analytics Platform

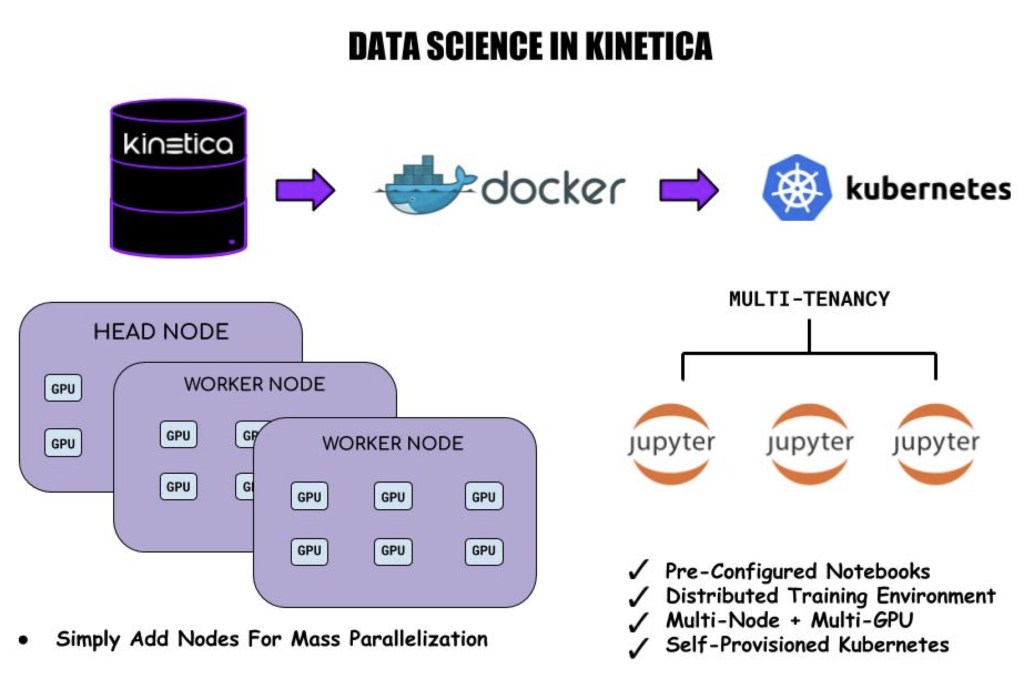

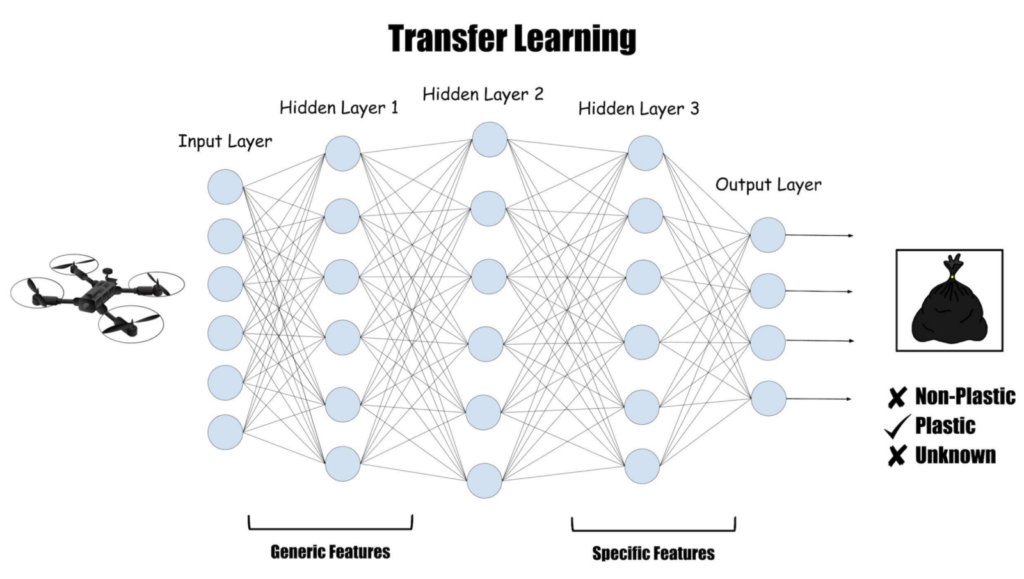

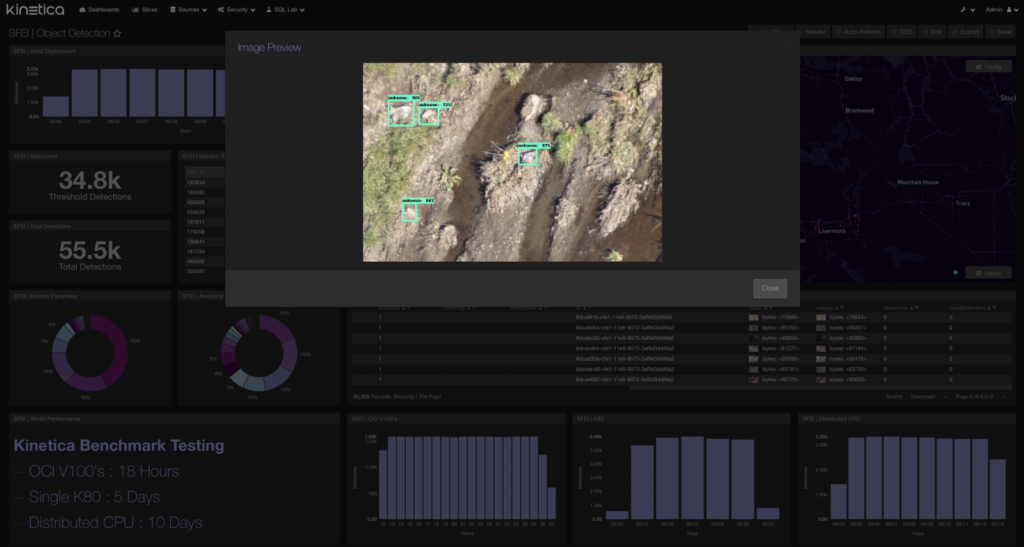

The first challenge this project poses is training and deploying a convolutional neural network (CNN) that detects trash in drone images, with performance that scales. For this, SFEI uses GPU-accelerated transfer learning with TensorFlow. The graphics processing unit (GPU) has traditionally been used in the gaming industry to accelerate image processing and computer graphics. Here, we take advantage of the GPU’s massive parallelism to rapidly train on, and run inference over, the images captured by the drones. SFEI has partnered with Kinetica to operationalize this model on Kinetica’s Active Analytics Platform. Kinetica is an in-memory, GPU-accelerated database with a comprehensive data science platform that helps enterprises solve their most complex problems. Using Kinetica’s out-of-the-box toolkits, we deployed this TensorFlow model in continuous mode on an automatically orchestrated Kubernetes cluster, providing a method for streaming object detection. As images stream into Kinetica, the CNN runs inference on them and marks any detected trash with a bounding box.

A typical data science workflow using Kinetica’s Active Analytics Workbench

Under the Hood

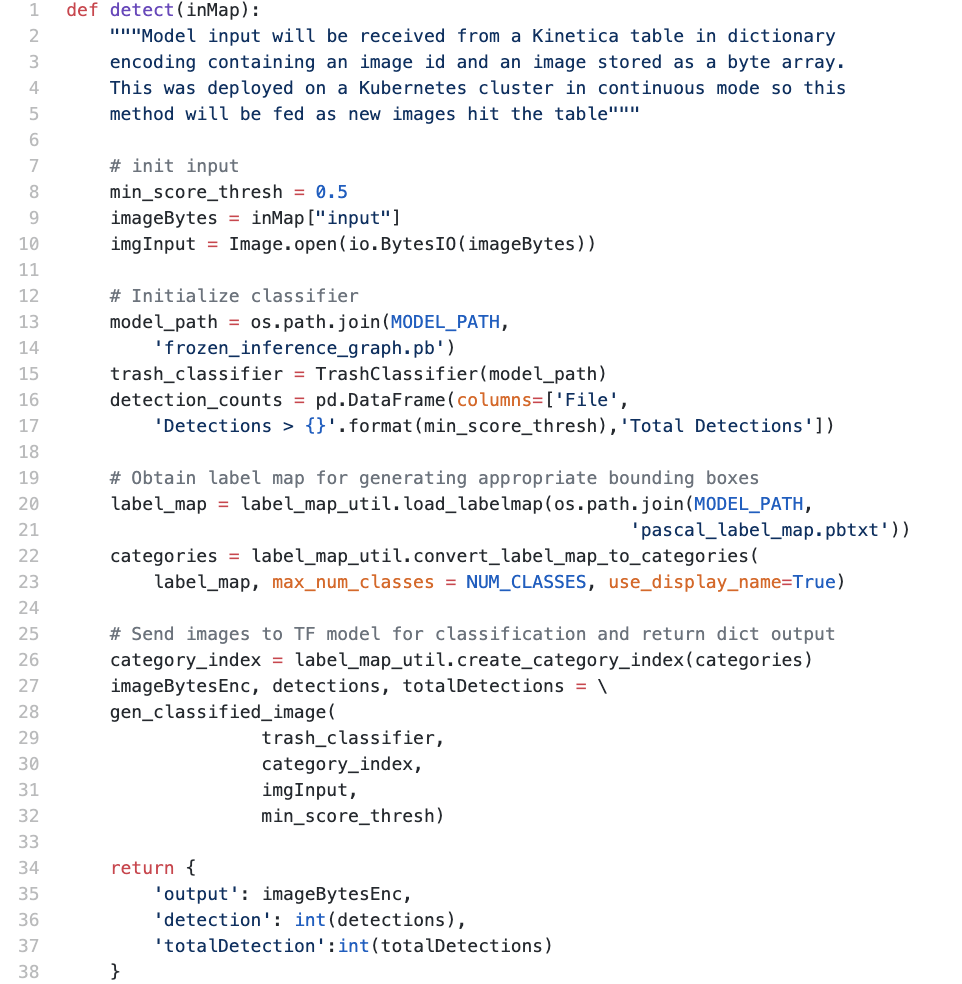

Now that we have developed an awesome convolutional neural network capable of detecting trash in drone images, how do we actually take it to production and derive the value it was built for? This is a challenge many data scientists and organizations face today. Let’s look at how we accomplish this in Kinetica.

- Development: Kinetica is agnostic to the languages and libraries used to build machine learning or deep learning models. Tools exist to deploy these algorithms whether a data scientist prefers R, Python, Spark, RAPIDS, or something else. Data scientists can train and tune their models in pre-configured Jupyter notebooks running natively in Kinetica, or work in the IDE of their choice.

- Docker: Docker is pivotal to the flexibility of the training and deployment process. Regardless of the languages or libraries used, we can containerize those dependencies along with the pre-trained model and push the image to a Docker registry, from which it can be pulled at deployment time.

- Kubernetes: Kinetica’s Active Analytics Workbench runs on top of a Kubernetes cluster. This cluster can be provisioned automatically at install time, or users can bring an existing cluster by adding its Kubernetes configuration (kubeconfig) file to the Kinetica cluster. When a model is deployed, the Kinetica Black Box SDK automatically pulls the Docker image and launches it on Kubernetes, so users only need to supply the image URL.

These workloads can run in on-demand, batch, or continuous mode. On-demand models can be built directly into active analytical applications and called through an endpoint for instant what-if scenarios, forecasting, and more. Traditional batch processes can be churned through at massive scale and speed, cutting down time-to-insight. Most importantly, distributed GPU inferencing makes streaming analytics in continuous mode a performant option. In this mode, the model acts as a table monitor: as new data is ingested into the table, the model makes an inference and stores the results, along with auditing information, in a results table for evaluation.
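The table-monitor idea can be illustrated with a minimal, framework-agnostic sketch in Python. It does not use Kinetica’s API. The class name `ContinuousInference`, the in-memory lists standing in for tables, and the audit columns `model_version` and `inferred_at` are all hypothetical. Each call to `poll` picks up only the rows ingested since the previous call, runs the model on them, and appends the outputs plus auditing information to the results.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


@dataclass
class ContinuousInference:
    """Hypothetical table monitor that infers only on newly ingested rows.

    This illustrates the pattern; it is not Kinetica's implementation.
    """

    infer: Callable[[Row], Row]  # the deployed model, wrapped as a function
    model_version: str           # recorded with every result for auditing
    results: List[Row] = field(default_factory=list)  # stands in for the results table
    _offset: int = 0             # index of the first source row not yet processed

    def poll(self, source_table: List[Row]) -> int:
        """Process rows appended since the last poll and return how many were processed."""
        new_rows = source_table[self._offset:]
        for row in new_rows:
            output = self.infer(row)
            # Store the model output together with auditing information.
            self.results.append(
                {**output, "model_version": self.model_version, "inferred_at": time.time()}
            )
        self._offset += len(new_rows)
        return len(new_rows)


if __name__ == "__main__":
    images = [{"image_id": "img-001"}, {"image_id": "img-002"}]
    monitor = ContinuousInference(
        infer=lambda row: {"image_id": row["image_id"], "trash_detected": True},
        model_version="v1",
    )
    print(monitor.poll(images))  # processes both existing rows
    images.append({"image_id": "img-003"})
    print(monitor.poll(images))  # processes only the newly ingested row
```

In a real deployment, polling would be triggered by ingestion or run on a schedule, and the offset would need to survive restarts. This sketch keeps everything in memory for clarity.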

Interacting With the Active Analytics Workbench

The deployed model is exposed through a single method. It receives a dictionary containing an image ID and the image (stored as a byte array), passes the image to the pre-trained CNN, and returns the output as a dictionary, which is then stored in the results table.
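The sketch below shows that input/output contract in plain Python. It is a rough illustration only. The function name `detect_trash`, the keys `image_id` and `image`, the detection format (`box`, `score`), and the `min_score` threshold are all assumptions. The CNN is passed in as a callable so the sketch runs without TensorFlow. In a TensorFlow deployment, that callable would typically decode the bytes (for example with `tf.io.decode_image`) and run the pre-trained detector.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical detection format: {"box": [x_min, y_min, x_max, y_max], "score": float}
Detection = Dict[str, Any]


def detect_trash(
    in_map: Dict[str, Any],
    model: Callable[[bytes], List[Detection]],
    min_score: float = 0.5,
) -> Dict[str, Any]:
    """Run the CNN on one image and return a flat dict suitable for a results table."""
    image_id = in_map["image_id"]
    image_bytes = in_map["image"]
    if not isinstance(image_bytes, (bytes, bytearray)):
        raise TypeError("expected the image as a byte array")

    # Keep only detections the model is reasonably confident about.
    detections = [d for d in model(bytes(image_bytes)) if d["score"] >= min_score]

    return {
        "image_id": image_id,
        "num_detections": len(detections),
        # Serialize variable-length lists so each result fits in a single column.
        "boxes": json.dumps([d["box"] for d in detections]),
        "scores": json.dumps([d["score"] for d in detections]),
    }


def fake_model(image: bytes) -> List[Detection]:
    """Stand-in for the pre-trained CNN, used only to exercise detect_trash."""
    return [
        {"box": [10, 20, 50, 60], "score": 0.92},
        {"box": [70, 80, 90, 95], "score": 0.31},
    ]


if __name__ == "__main__":
    row = {"image_id": "img-001", "image": b"\x89PNG..."}
    print(detect_trash(row, fake_model))
```

Returning a flat dictionary keeps the mapping to a results table simple: one key per column, with variable-length outputs serialized as JSON strings.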

Performance Benchmarking Using State-of-the-Art Compute on Oracle Cloud Infrastructure

To benchmark performance, we deployed the project three separate times, once in each of the following configurations, and compared the results:

- Distributed CPU, replicating the model across available cores

- Single GPU on NVIDIA Tesla K80 hardware

- Distributed GPU on Oracle Cloud Infrastructure
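Both distributed configurations run one replica of the model per worker (a CPU core or a GPU) and split the image set among the replicas. The post does not say how Kinetica partitions the work, so the sketch below simply assumes a round-robin split. `shard_round_robin` is a hypothetical helper.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def shard_round_robin(items: Sequence[T], num_workers: int) -> List[List[T]]:
    """Split items into num_workers shards, assigning item i to shard i % num_workers."""
    if num_workers < 1:
        raise ValueError("num_workers must be at least 1")
    # items[w::num_workers] selects every num_workers-th item starting at index w.
    return [list(items[w::num_workers]) for w in range(num_workers)]


if __name__ == "__main__":
    image_ids = [f"img-{i:05d}" for i in range(30_000)]
    shards = shard_round_robin(image_ids, num_workers=8)
    # Each model replica would then process its own shard independently.
    print([len(s) for s in shards])
```

For example, 30,000 images spread across 8 GPUs gives 3,750 images per replica. A common way to pin each replica to a single GPU is to set `CUDA_VISIBLE_DEVICES` for each worker process.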

SFEI provided months’ worth of drone data, amounting to over 30,000 images of the Bay Area. Each test configuration was deployed in continuous mode. On SFEI’s existing infrastructure, analyzing these images took roughly 17 days. Using distributed CPU, we churned through the image set in 10 days. Kinetica’s push-button model replication gave a nice performance kick, but using the GPU is where the real performance came into play. On a single GPU, we brought the inference time down to 5 days. For the final test harness, our partners at Oracle provisioned us with an 8-GPU machine on Oracle Cloud Infrastructure. We distributed the workload across all 8 GPUs and ran the same test against the same image set. The inference completed in 18 hours and 26 minutes, roughly a 22x speedup over the original 17 days. That translates to about 2.2 seconds per image. Streaming object detection from drone images is now a scalable solution. Moving forward, SFEI can deploy multiple drones to cover more of the coastline and expand the scope of the project. With Kinetica’s linear scalability and the flexibility of scaling on Oracle Cloud Infrastructure, this project can expand seamlessly.
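The headline numbers can be checked with a few lines of arithmetic. This small sketch uses the run times reported above and treats 30,000 as the image count. Because the post says "over 30k", the per-image times it prints are upper bounds. The run labels and the `summarize` helper are illustrative names only.

```python
from datetime import timedelta
from typing import Dict, Tuple

NUM_IMAGES = 30_000  # the post says "over 30k", so per-image times are upper bounds

RUNS: Dict[str, timedelta] = {
    "SFEI existing infrastructure": timedelta(days=17),
    "Distributed CPU": timedelta(days=10),
    "Single GPU": timedelta(days=5),
    "8 GPUs on OCI": timedelta(hours=18, minutes=26),
}


def summarize(
    runs: Dict[str, timedelta], baseline: str, num_images: int
) -> Dict[str, Tuple[float, float]]:
    """Return {name: (speedup vs. baseline, seconds per image)}."""
    base = runs[baseline]
    return {
        # timedelta / timedelta yields a float ratio.
        name: (base / elapsed, elapsed.total_seconds() / num_images)
        for name, elapsed in runs.items()
    }


if __name__ == "__main__":
    summary = summarize(RUNS, "SFEI existing infrastructure", NUM_IMAGES)
    for name, (speedup, per_image) in summary.items():
        print(f"{name:30s} {speedup:5.1f}x  {per_image:6.2f} s/image")
```

The 8-GPU run works out to 408 h / 18.43 h ≈ 22.1x faster than the baseline, and 66,360 s / 30,000 images ≈ 2.21 s per image, which matches the figures reported above.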

Moving Forward: Open-Source Solutions Using Transfer Learning

The San Francisco Estuary Institute hopes to expand this project and make it available for public use.

“At SFEI, we run not only this particular trash-based model, but we have a number of people who run models. What Kinetica allows us to do is to accelerate the running of our models so that we can see the performance gains and allows us to tune those models at a much faster clip. That essentially makes what is currently impractical, if not impossible, practical and possible.” — Dr. Tony Hale, director of informatics, SF Estuary Institute

A big part of that vision will be the use of open-source transfer learning.

The intent behind transfer learning is to reuse knowledge from a prior task rather than start from scratch. In a convolutional neural network, different layers learn different features over the training cycle. The early layers learn very basic, general features such as edges and textures, while deeper layers learn features that are increasingly specific to the original task. We can therefore freeze certain layers by fixing their weights and fine-tune others to fit the task at hand. Retraining then starts from this fixed point, which reduces overall training time and typically improves performance when labeled data is limited. With that in mind, SFEI would like to make this project publicly available to help accelerate similar projects.
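The freeze-then-fine-tune idea can be expressed as a minimal Keras sketch. It is illustrative only. It uses MobileNetV2 as the pre-trained backbone and a simple classification head (for example, trash vs. no trash), whereas SFEI’s model is an object detector that outputs bounding boxes. The backbone choice and the function names are assumptions. Pass `weights="imagenet"` to start from pre-trained weights; `weights=None` builds the same architecture without downloading anything.

```python
import tensorflow as tf


def _compile(model, learning_rate):
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate),
        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
        metrics=["accuracy"],
    )


def build_transfer_model(num_classes, weights="imagenet"):
    """Pre-trained backbone with frozen weights plus a new trainable head."""
    base = tf.keras.applications.MobileNetV2(
        input_shape=(224, 224, 3), include_top=False, weights=weights
    )
    base.trainable = False  # freeze: keep the learned feature extractor fixed

    inputs = tf.keras.Input(shape=(224, 224, 3))
    # training=False keeps BatchNormalization layers in inference mode,
    # which matters once the backbone is later unfrozen for fine-tuning.
    x = base(inputs, training=False)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    outputs = tf.keras.layers.Dense(num_classes)(x)  # new task-specific layer
    model = tf.keras.Model(inputs, outputs)
    _compile(model, 1e-3)
    return model, base


def unfreeze_top_layers(model, base, num_layers, learning_rate=1e-5):
    """Fine-tune: make only the last num_layers layers of the backbone trainable."""
    if num_layers < 1:
        raise ValueError("num_layers must be at least 1")
    base.trainable = True
    for layer in base.layers[:-num_layers]:
        layer.trainable = False
    # Recompile so the change in trainable state takes effect; use a low learning rate.
    _compile(model, learning_rate)
```

A typical workflow trains the new head first with the backbone frozen, then calls `unfreeze_top_layers` and continues training at a much lower learning rate. This avoids destroying the pre-trained features early in training.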

Reveal, Kinetica’s BI tool rendering drone images that have been analyzed by a GPU-enabled CNN

Big thanks to the SFEI team (Tony Hale and Lorenzo Flores), Sanjay Basu from Oracle, and the Kinetica team (Daniel Raskin, Zhe Wu, Saif Ahmed, Julian Jenkins, Nohyun Myung, Antonio Controneo, Rebecca Golden, and Pat Khunachak) for all of the hard work to make this project happen.

- Watch a demo of GPU-accelerated object detection for SFEI with Kinetica and Oracle Cloud

- Watch this customer story to see how SFEI leverages Kinetica and Oracle Cloud to keep the Bay Area clean


There is more to come as we expand this project. Stay tuned…

Nick Alonso is a Solutions Engineer at Kinetica.
