Run complex queries on streams from Confluent in real time

Kinetica is a GPU-accelerated database that executes spatial, time-series, and OLAP queries on high-velocity data streams from Confluent Cloud in seconds, offering a robust architecture for observing and analyzing data in motion. That data can come from vehicles, networks, satellites, supply chains, smart cities, stock prices, and more.

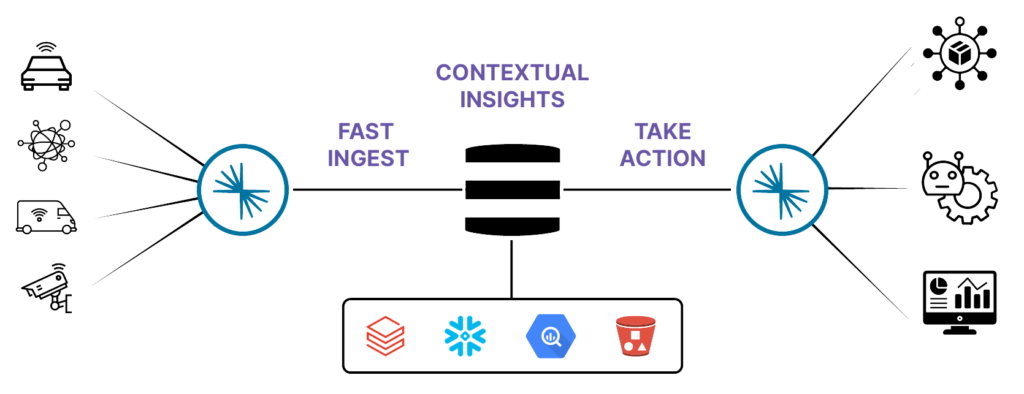

Combine sensor and machine data from Kafka with contextual data for advanced real-time analysis

Fast Ingest

Kinetica keeps up with high-volume Kafka topics thanks to its lockless, multi-head architecture. Experience the unrivaled speed of data ingestion.

Contextual Insights

Kinetica and Confluent provide a landscape where data isn't just ingested; it's quickly understood in its spatial and temporal context, unveiling insights previously obscured.

Take Action

Kinetica can publish these enriched insights back to a Confluent stream to empower your organization to act decisively on the most relevant and contextual information.

Try Kinetica Now: Kinetica Cloud is free for projects up to 10 GB. Get Started »

Real-time Analysis of Objects in Motion

Stream data from sensors, mobile apps, IoT devices, and social media from Kafka into Kinetica. Combine it with data at rest, and analyze it in real time to improve the customer experience, deliver targeted marketing offers, and increase operational efficiency.
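To make the idea of combining streaming data with data at rest concrete, here is a minimal sketch in plain Python. It is an illustration only and does not use Kinetica's or Confluent's APIs: the `Reading` type, the `FLEET` lookup table, the `enrich` function, and the sample coordinates are all hypothetical, and an in-memory list stands in for a Kafka topic.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt


@dataclass
class Reading:
    """One hypothetical sensor event, as it might arrive from a stream."""
    vehicle_id: str
    ts: float   # event time, seconds
    lat: float
    lon: float


# Hypothetical "data at rest": reference data keyed by vehicle_id.
FLEET = {
    "truck-1": {"depot": "north", "depot_lat": 40.0, "depot_lon": -75.0},
    "truck-2": {"depot": "south", "depot_lat": 39.0, "depot_lon": -76.0},
}


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    r = 6371.0  # mean Earth radius in km
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * r * asin(sqrt(a))


def enrich(readings, fleet, radius_km):
    """Join each streaming reading with its reference row and add spatial context."""
    for r in readings:
        ctx = fleet.get(r.vehicle_id)
        if ctx is None:
            continue  # no reference data for this vehicle; skip it
        dist = haversine_km(r.lat, r.lon, ctx["depot_lat"], ctx["depot_lon"])
        yield {
            "vehicle_id": r.vehicle_id,
            "ts": r.ts,
            "depot": ctx["depot"],
            "km_from_depot": dist,
            "outside_radius": dist > radius_km,
        }


# An in-memory list stands in for the stream in this sketch.
stream = [
    Reading("truck-1", 0.0, 40.0, -75.0),  # at its depot
    Reading("truck-2", 1.0, 40.0, -76.0),  # one degree of latitude north of its depot
    Reading("truck-9", 2.0, 0.0, 0.0),     # not present in the reference data
]
enriched = list(enrich(stream, FLEET, radius_km=50.0))
```

In a real deployment the join and the distance check would run inside the database against live topics; the sketch only shows the shape of the enrichment step.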

Gen AI on Confluent Topics

Write natural language queries against your Kafka streams. Kinetica's GPU architecture and native LLM deliver fast answers on real-time data. More »

Technology Report

Making Sense of Sensor Data

As sensor data grows more complex, legacy data infrastructure struggles to keep pace, and a new set of design patterns is needed to unlock maximum value. Get this complimentary report from MIT Technology Review.

Book a Demo!

The best way to appreciate the possibilities that Kinetica brings to high-performance real-time analytics is to see it in action.

Contact us, and we'll give you a tour of Kinetica. We can also help you get started using it with your own data, your own schemas and your own queries.